5 minute read • By Luca Dubies

GPU Virtualization

Introduction

My first big project here at Noelscher Consulting was to get a certain workload running on some VMs on our own hardware. (What workload? Not your business!) While this included industry-standard technologies like Docker and the ESXi hypervisor, there were some uncertainties at the beginning, especially because the application running in the containers required Windows and GPU resources.

We wanted to examine GPU-supported containers and test our own hardware setup for future projects. In this series of blog posts we will explore some of the hurdles we encountered on our way to having a scalable, GPU-dependent workload running on Windows VMs.

We like to host many of our services locally at Noelscher Consulting. Therefore, the first task was to get the machines ready for the containers to run on.

SR-IOV

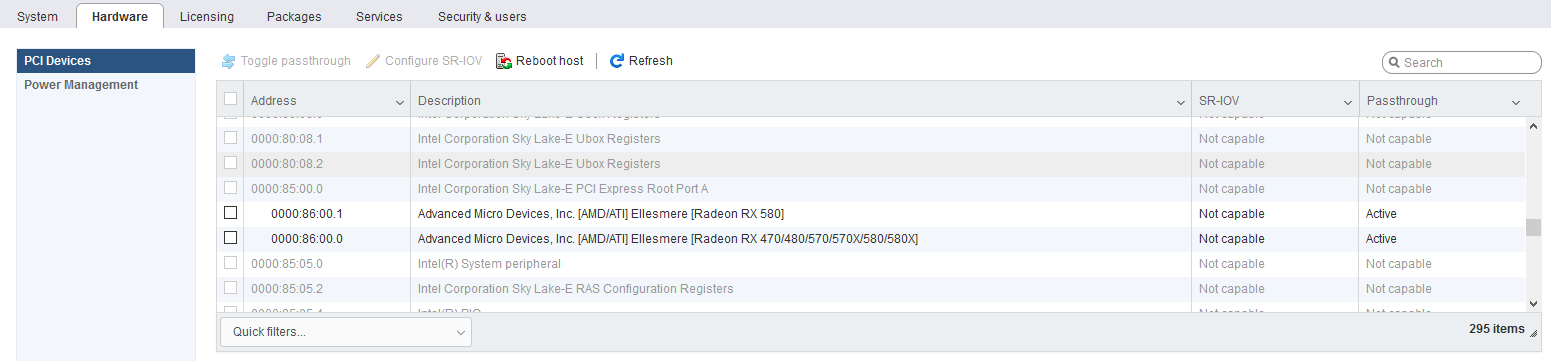

The GPUs we had initially dedicated to the project were two AMD GPUs that we installed in our ESXi server. They are supposed to support SR-IOV, and we were excited to work with it. SR-IOV allows multiple guest systems to access a single PCI Express device, in our case the GPUs. But the driver installation proved problematic on every VM. Constant crashes and freezes kept us from having an even remotely usable system for testing. While researching solutions, we found that there were apparently issues with our GPU retailer's BIOS regarding SR-IOV support. Not wanting to waste any time, we decided to use traditional Passthrough.

Configuring devices for SR-IOV or Passthrough in ESXi

GPU Passthrough

With Passthrough, you link the device directly to a single guest VM. As our hardware setup did not require much flexibility and we could fully dedicate those two GPUs to our project, using Passthrough instead of the more flexible SR-IOV was not an issue for us.
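The difference between the two modes can be pictured with a toy allocation model. This is only a sketch to illustrate the concept, not how ESXi implements either mode: the class names, the device names and the number of virtual functions are all made up for the example.

```python
class PassthroughDevice:
    """Toy model: a physical device handed to exactly one guest VM."""

    def __init__(self, name):
        self.name = name
        self.owner = None  # no VM attached yet

    def attach(self, vm):
        # Passthrough is exclusive: a second VM cannot share the device.
        if self.owner is not None:
            raise RuntimeError(f"{self.name} is already attached to {self.owner}")
        self.owner = vm


class SriovDevice:
    """Toy model: a physical device exposing several virtual functions (VFs)."""

    def __init__(self, name, num_vfs):
        self.name = name
        self.vfs = [None] * num_vfs  # one slot per virtual function

    def attach(self, vm):
        # Hand the first free virtual function to the requesting VM.
        for index, owner in enumerate(self.vfs):
            if owner is None:
                self.vfs[index] = vm
                return index
        raise RuntimeError(f"{self.name} has no free virtual function")


# Hypothetical devices and VM names, for illustration only.
dedicated = PassthroughDevice("gpu0")
dedicated.attach("vm1")

shared = SriovDevice("gpu1", num_vfs=2)
shared.attach("vm1")
shared.attach("vm2")
```

In this picture, a Passthrough device is tied to one VM for as long as it is attached, while an SR-IOV device can serve as many VMs as it has virtual functions.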

Two VMs running Windows 10 were quickly set up on the ESXi host, and after running one GPU in Passthrough, we had good benchmark results on our first VM. It looked like we could move on from hardware and start dockerizing our workload. Problems arose when we tried to get the second GPU running at the same time. If one VM was running, the second one would not boot, with Windows reporting hardware errors.

To find out where the issue originated, we decided to test a different pair of GPUs (specifically two R580Xs). But the same problem kept bugging us: we were not able to get two GPUs running in Passthrough at the same time. We even discussed ordering another pair of GPUs or borrowing GPUs from other systems for more testing. Luckily, we found hints at what we were doing wrong before it came to that.

Our Solution

Looking back, the root of our issue was decidedly too mundane. Two GPUs of the same model simply don't have unique identifiers for Passthrough. While we stumbled on interesting articles and discussions from people facing similar issues, we decided to just grab a TITAN, remove one of our R580Xs and install the TITAN in its place. And voilà, in no time we had two VMs with dedicated GPUs up and running, waiting to execute our dynamically scheduled containers.
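To make the collision concrete, here is a minimal Python sketch that groups Passthrough candidates by their PCI vendor and device ID and flags any devices that share both. The inventory is hypothetical illustration data: the bus addresses and the device IDs `aaaa` and `bbbb` are placeholders, not values read from our host, and the helper `find_id_collisions` is our own invention. How ESXi matches devices internally may well differ from this simplified picture.

```python
from collections import defaultdict
from typing import NamedTuple


class PciDevice(NamedTuple):
    address: str    # PCI bus address (placeholder values below)
    vendor_id: str  # 4-digit hex vendor ID
    device_id: str  # 4-digit hex device ID (placeholder values below)
    label: str


def find_id_collisions(devices):
    """Return the groups of devices that share one vendor:device ID pair."""
    groups = defaultdict(list)
    for dev in devices:
        groups[(dev.vendor_id, dev.device_id)].append(dev)
    # Only ID pairs used by more than one device count as a collision.
    return {ids: devs for ids, devs in groups.items() if len(devs) > 1}


# Hypothetical inventories: two cards of the same model vs. two different models.
same_model = [
    PciDevice("0000:03:00.0", "1002", "aaaa", "R580X #1"),
    PciDevice("0000:04:00.0", "1002", "aaaa", "R580X #2"),
]
mixed_models = [
    PciDevice("0000:03:00.0", "1002", "aaaa", "R580X"),
    PciDevice("0000:04:00.0", "10de", "bbbb", "TITAN"),
]

for name, inventory in (("same model", same_model), ("mixed models", mixed_models)):
    collisions = find_id_collisions(inventory)
    print(name, "->", "collision" if collisions else "no collision")
```

A check like this before assigning devices would have pointed us at the identical ID pair right away, instead of at the hardware itself.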

What We’ve Learned

The age-old instinct of buying and working with multiples of the same hardware, like HDDs for a RAID or GPUs for SLI/Crossfire, was definitely no help in our case. But working with different GPU models is not an elegant solution either, since guest systems with different GPUs can handle different loads. For GPU virtualization, SR-IOV generally seems the way to go in the future, as the resource can be accessed dynamically by whichever workload needs it.

Related Topics

Part 2: Build Windows Containers for GPU support

Part 3: Dynamically Start Workload Containers